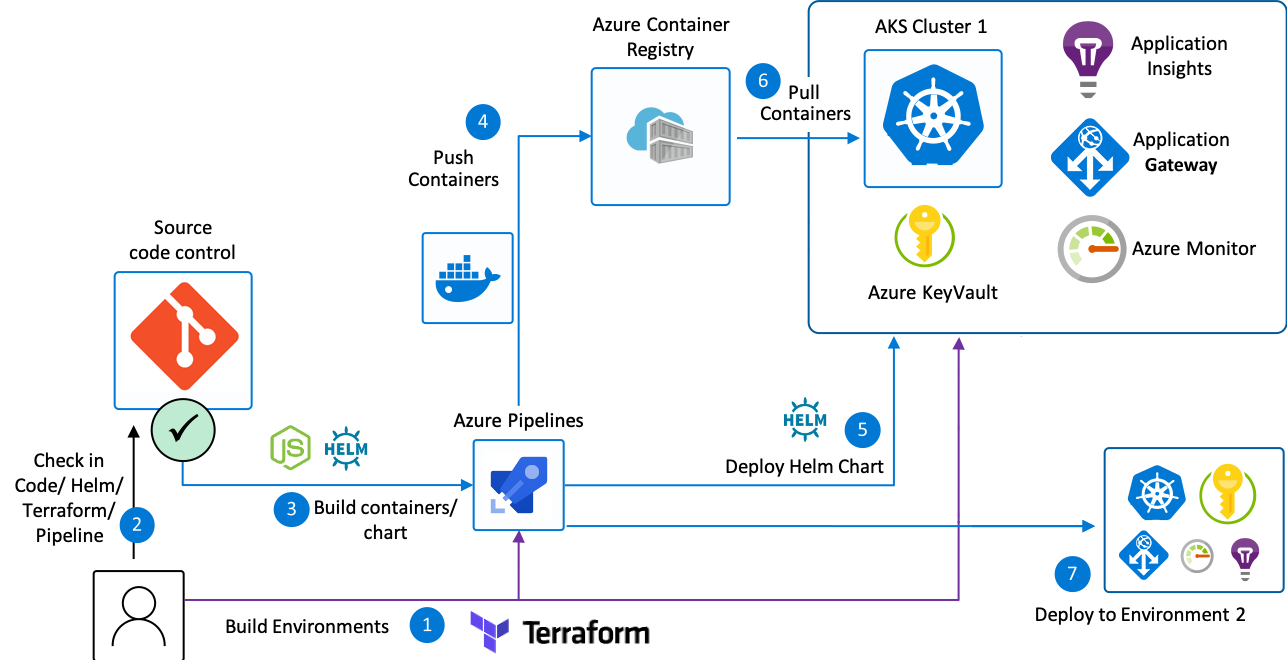

Setting up an Azure Kubernetes Service (AKS) cluster using Terraform is fairly easy. Setting up a full-fledged AKS cluster that can read images from Azure Container Registry (ACR) and fetch secrets from Azure Key Vault using Pod Identity, while all traffic is routed via an AKS-managed Application Gateway, is much harder.

To save others from the trouble I ran into while creating this one-click deployment, I've published a GitHub repository that serves as a boilerplate for the scenario described above. It fully deploys and configures your Azure Kubernetes Service in the cloud using a single Terraform deployment.

This blog post goes into the details of the different resources that are deployed, why these specific resources are chosen and how they tie into each other. A future blog post will build upon this and explain how Helm can be used to automate your container deployments to the AKS cluster.

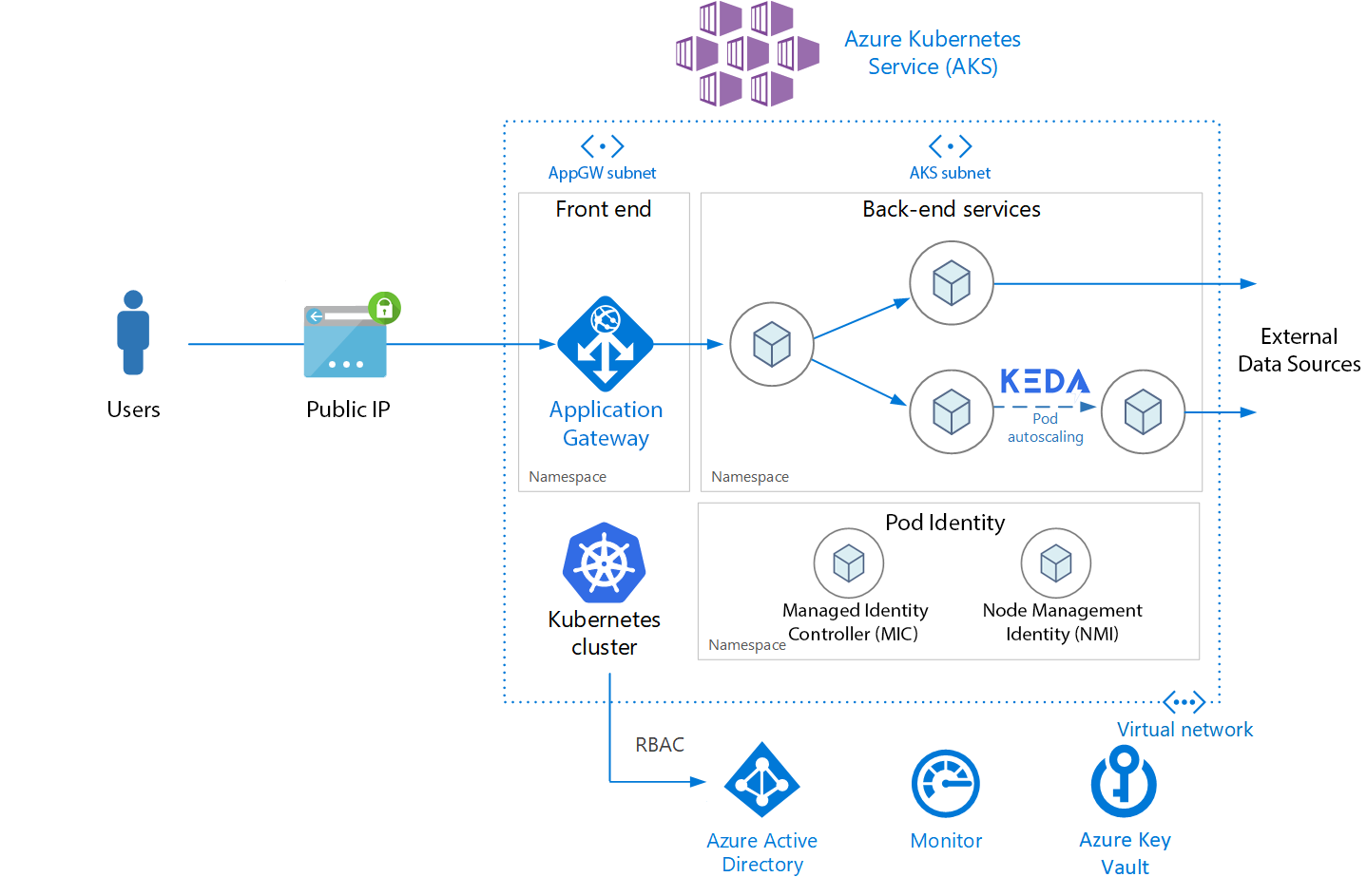

Architecture Overview

Azure Kubernetes Service

Azure Kubernetes Service (AKS) makes it simple to deploy a managed Kubernetes cluster in Azure. Kubernetes is an open-source container orchestration platform that automates many of the manual processes involved in deploying, managing, and scaling containerized applications. Having this cluster is ideal when you want to run multiple containerized services and don't want to worry about managing and scaling them.

Azure Container Registry

Azure Container Registry (ACR) is a managed Docker registry service, and it allows you to store and manage images for all types of container deployments. Every service can be pushed to its own repository in Azure Container Registry and every codebase change in a specific service can trigger a pipeline that pushes a new version for that container to ACR with a unique tag.
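As a rough sketch of such a pipeline, the snippet below assumes Azure Pipelines as the CI system, which this post does not prescribe. The service connection name `acr-service-connection` and the `services/test-container` folder are hypothetical placeholders. It builds the image on every change to that folder and pushes it to ACR, tagged with the unique build ID.

```yaml
# Sketch only: assumes Azure Pipelines and a Docker registry service
# connection to ACR named "acr-service-connection" (hypothetical).
trigger:
  branches:
    include:
      - main
  paths:
    include:
      - services/test-container   # hypothetical folder of one service

pool:
  vmImage: ubuntu-latest

steps:
  - task: Docker@2
    displayName: Build and push image to ACR
    inputs:
      containerRegistry: acr-service-connection
      repository: test-container
      command: buildAndPush
      Dockerfile: services/test-container/Dockerfile
      tags: |
        $(Build.BuildId)
```

Any CI system that can run `docker build` and `docker push` against the registry would work just as well; the important part is that every build produces a unique tag.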

AKS and ACR integration is set up during the deployment of the AKS cluster with Terraform. This allows the AKS cluster to interact with ACR using an Azure Active Directory service principal. The Terraform deployment automatically configures RBAC permissions on the ACR resource by assigning the AcrPull role to the service principal.

With this integration in place, AKS pods can fetch any of the Docker images that are pushed to ACR, even though ACR is set up as a private Docker registry. Don't forget to prefix the image name with your registry's azurecr.io address and to specify a tag. It is best practice not to use the :latest tag, since it always points to the most recently pushed image in your repository and might introduce unwanted changes. Always pin the container to a specific version and update that version in your YAML file when you want to upgrade.

A simple example for a pod that's running a container from the youracrname.azurecr.io container registry, the test-container repository with tag 20210301, is shown below:

    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: test-container
    spec:
      containers:
        - name: test-container
          image: youracrname.azurecr.io/test-container:20210301

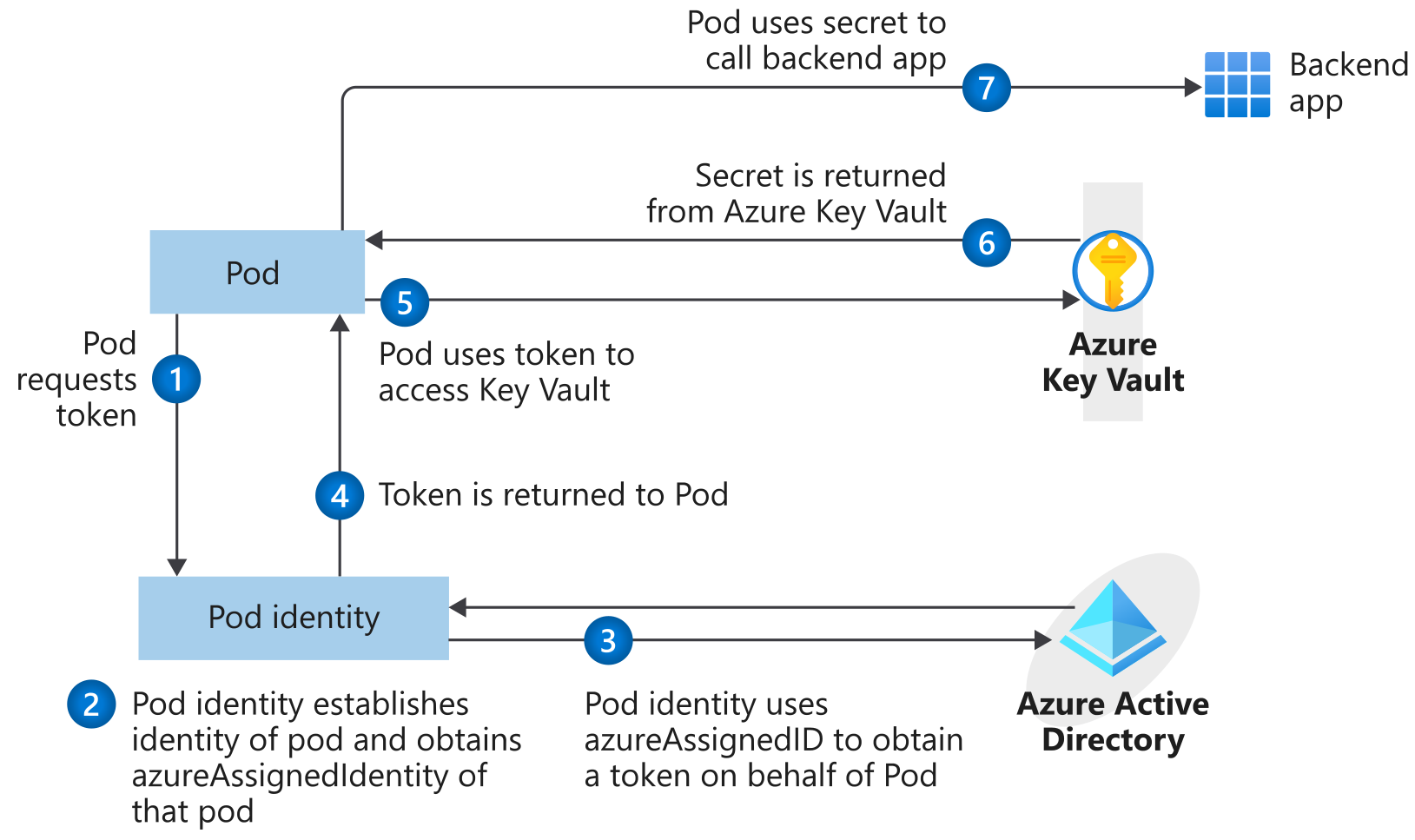

Pod Identity

AAD Pod Identity enables Kubernetes applications to access cloud resources securely with Azure Active Directory. It's best practice to not use fixed credentials within pods or container images, as they are at risk of exposure or abuse. Instead, we're using pod identities to request access using Azure AD.

When a pod needs access to other Azure services, such as Cosmos DB, Key Vault, or Blob Storage, it needs access credentials. You don't manually define credentials for pods; instead, they request an access token in real time and can use it to access only the services assigned to that identity.

Pod Identity is fully configured on the AKS cluster when the Terraform script is deployed, and pods inside the AKS cluster can use the preconfigured pod identity by specifying the corresponding aadpodidbinding pod label.

Once the identity binding is deployed, any pod in the AKS cluster can use it by matching the pod label as follows:

    apiVersion: v1
    kind: Pod
    metadata:
      name: demo
      labels:
        aadpodidbinding: $IDENTITY_NAME
    spec:
      containers:
        - name: demo
          image: mcr.microsoft.com/oss/azure/aad-pod-identity/demo:latest
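For context, the label on the pod is matched against the `selector` of an `AzureIdentityBinding`, which in turn points to an `AzureIdentity` describing the managed identity. The Terraform deployment sets these up for you, so the manifests below are only an illustrative sketch of what such a pair looks like; `$IDENTITY_RESOURCE_ID` and `$IDENTITY_CLIENT_ID` are placeholders for the values of your own user-assigned identity, and the names used in the repository may differ.

```yaml
# Sketch only: placeholders must be replaced with real values.
apiVersion: aadpodidentity.k8s.io/v1
kind: AzureIdentity
metadata:
  name: $IDENTITY_NAME
spec:
  type: 0                             # 0 = user-assigned managed identity
  resourceID: $IDENTITY_RESOURCE_ID   # full Azure resource ID of the identity
  clientID: $IDENTITY_CLIENT_ID       # client ID of the identity
---
apiVersion: aadpodidentity.k8s.io/v1
kind: AzureIdentityBinding
metadata:
  name: $IDENTITY_NAME-binding
spec:
  azureIdentity: $IDENTITY_NAME       # refers to the AzureIdentity above
  selector: $IDENTITY_NAME            # must equal the pod's aadpodidbinding label
```

Only pods whose `aadpodidbinding` label equals the binding's `selector` receive tokens for that identity.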

Azure Key Vault Secrets Store CSI Driver

Some of the services in the AKS cluster connect to external services. The connection strings and other secret values that are needed by the pods are stored in Azure Key Vault. By storing these values in Key Vault, we ensure that they are not versioned in the Git repository as code and are not accessible to anyone who has access to the AKS cluster.

Pod identity is used to securely mount these secrets in the pods and make them available to the container as environment variables. The flow for a pod fetching these variables is shown in the diagram below:

Luckily, there's no need to worry about all these different data flows: we can simply deploy the Azure Key Vault Provider for Secrets Store CSI Driver on the AKS cluster. This provider leverages pod identity on the cluster and lets you define a SecretProviderClass that lists all the secrets you want to fetch from Azure Key Vault.

A basic example of a SecretProviderClass named kv-secrets that fetches the secret secret1 from the Key Vault kvname:

    apiVersion: secrets-store.csi.x-k8s.io/v1alpha1
    kind: SecretProviderClass
    metadata:
      name: kv-secrets
    spec:
      provider: azure
      parameters:
        usePodIdentity: "true"   # set to "true" to enable Pod Identity
        keyvaultName: "kvname"   # the name of the Key Vault
        objects: |
          array:
            - |
              objectName: secret1   # name of the secret in Key Vault
              objectType: secret
        tenantId: ""             # tenant ID of the Key Vault

To use the secret secret1 from kv-secrets in a pod and make it available in the nginx container as the environment variable SECRET_ENV:

    spec:
      containers:
        - image: nginx
          name: nginx
          env:
            - name: SECRET_ENV
              valueFrom:
                secretKeyRef:
                  name: kv-secrets
                  key: secret1
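The snippet above is only a fragment. For `secretKeyRef` to resolve, the driver has to sync the Key Vault secret into a regular Kubernetes Secret, and that only happens while a pod mounts the CSI volume. The sketch below shows one way the pieces could fit together, assuming the driver is installed with secret syncing enabled. The pod name `nginx-secrets`, the volume name `secrets-store`, and the mount path are illustrative choices, not names taken from the repository.

```yaml
# Sketch only: assumes secret syncing is enabled on the CSI driver.
apiVersion: secrets-store.csi.x-k8s.io/v1alpha1
kind: SecretProviderClass
metadata:
  name: kv-secrets
spec:
  provider: azure
  secretObjects:                 # sync fetched objects into a Kubernetes Secret
    - secretName: kv-secrets     # name referenced by secretKeyRef below
      type: Opaque
      data:
        - objectName: secret1    # object fetched from Key Vault
          key: secret1           # key inside the Kubernetes Secret
  parameters:
    usePodIdentity: "true"
    keyvaultName: "kvname"
    objects: |
      array:
        - |
          objectName: secret1
          objectType: secret
    tenantId: ""                 # tenant ID of the Key Vault
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-secrets            # hypothetical pod name
  labels:
    aadpodidbinding: $IDENTITY_NAME
spec:
  containers:
    - name: nginx
      image: nginx
      env:
        - name: SECRET_ENV
          valueFrom:
            secretKeyRef:
              name: kv-secrets
              key: secret1
      volumeMounts:
        - name: secrets-store
          mountPath: /mnt/secrets-store
          readOnly: true
  volumes:
    - name: secrets-store
      csi:
        driver: secrets-store.csi.k8s.io
        readOnly: true
        volumeAttributes:
          secretProviderClass: kv-secrets
```

With this layout the secret is also available as a file under the mount path, which can be an alternative to environment variables.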

Application Gateway

All traffic that accesses the AKS cluster is routed via an Azure Application Gateway. The Application Gateway acts as a Load Balancer and routes the incoming traffic to the corresponding services in AKS.

Specifically, the Application Gateway Ingress Controller (AGIC) is used. This Ingress Controller is deployed on the AKS cluster in its own pod. AGIC monitors the Kubernetes cluster it is hosted on and continuously updates the Application Gateway, so that selected services are exposed to the Internet on the specified URL paths and ports, straight from the Ingress rules defined in AKS. This flow is shown in the diagram below:

As shown in the overview diagram, the client reaches a Public IP endpoint before the request is forwarded to the Application Gateway. This Public IP is deployed as part of the Terraform deployment on an Azure-based FQDN (e.g. your-app.westeurope.cloudapp.azure.com).
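To make the Ingress rules that AGIC watches more concrete, here is a minimal sketch of an Ingress that exposes a service through the Application Gateway. It assumes a Kubernetes Service named `test-container` listening on port 80, which is a hypothetical example and not part of the repository, and a cluster version that supports the `networking.k8s.io/v1` Ingress API (Kubernetes 1.19 or later).

```yaml
# Sketch only: the Service name and port are assumptions.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-container
  annotations:
    # Tells AGIC that this Ingress should be served by the Application Gateway
    kubernetes.io/ingress.class: azure/application-gateway
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: test-container
                port:
                  number: 80
```

Once such an Ingress is applied, AGIC should translate it into listener, routing rule, and backend pool settings on the Application Gateway.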

Closing remarks

That was a lot of detail on the inner workings of some of these resources. Fortunately, all of this configuration happens for you, and all you need to do is set up the tfvars variables for your environment. Keep an eye out for the upcoming blog post on setting up Helm to automate your container deployments.

What are you waiting for? Clone the repo and start deploying your fully configured AKS cluster.